Errors-in-variables models

In statistics and econometrics, errors-in-variables models or measurement errors models are regression models that account for measurement errors in the independent variables. In contrast, standard regression models assume that those regressors have been measured exactly, or observed without error; as such, those models account only for errors in the dependent variables, or responses.

In the case when some regressors have been measured with errors, estimation based on the standard assumption leads to inconsistent estimates, meaning that the parameter estimates do not tend to the true values even in very large samples. For simple linear regression the effect is an underestimate of the coefficient, known as the attenuation bias. In non-linear models the direction of the bias is likely to be more complicated.[1]

Motivational example

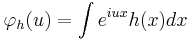

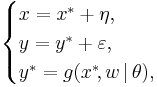

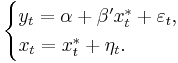

Consider a simple linear regression model of the form

$$y_t = \alpha + \beta x_t^* + \varepsilon_t, \quad t = 1, \ldots, T,$$

where $x_t^*$ denotes the true but unobserved value of the regressor. Instead, we observe this value with an error:

$$x_t = x_t^* + \eta_t,$$

where the measurement error $\eta_t$ is assumed to be independent of the true value $x_t^*$.

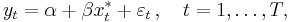

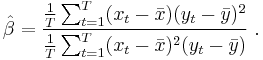

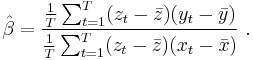

If the yt′s are simply regressed on the xt′s (see simple linear regression), then the estimator for the slope coefficient is

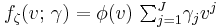

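which converges as the sample size $T$ increases without bound:

$$\hat\beta \xrightarrow{p} \frac{\operatorname{Cov}[x_t, y_t]}{\operatorname{Var}[x_t]} = \frac{\beta \sigma^2_{x^*}}{\sigma^2_{x^*} + \sigma^2_\eta} = \frac{\beta}{1 + \sigma^2_\eta/\sigma^2_{x^*}}.$$
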
The two variances here are positive, so in the limit the estimate is smaller in magnitude than the true value of β, an effect that statisticians call attenuation or regression dilution.[2] Thus the “naive” least squares estimator is inconsistent in this setting. However, the estimator is a consistent estimator of the parameter required for a best linear predictor of y given x: in some applications this may be what is required, rather than an estimate of the "true" regression coefficient, although that would assume that the variance of the errors in observing x* remains fixed.
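The attenuation effect can be checked numerically: the naive slope should approach β/(1 + σ²η/σ²x*) rather than β. The following is a minimal Python sketch (NumPy assumed available) that simulates the model with Gaussian x*, η and ε. The parameter values and the helper names `ols_slope` and `simulate_attenuation` are illustrative choices, not part of the original text.

```python
import numpy as np


def ols_slope(x, y):
    """Naive least-squares slope of y on x (model with intercept)."""
    xc = x - x.mean()
    yc = y - y.mean()
    return (xc @ yc) / (xc @ xc)


def simulate_attenuation(alpha=1.0, beta=2.0, sigma_xstar=1.0, sigma_eta=0.5,
                         sigma_eps=0.3, T=200_000, seed=0):
    """Simulate y_t = alpha + beta*x*_t + eps_t and x_t = x*_t + eta_t (illustrative values)."""
    rng = np.random.default_rng(seed)
    x_star = rng.normal(0.0, sigma_xstar, T)                  # latent regressor
    y = alpha + beta * x_star + rng.normal(0.0, sigma_eps, T)
    x = x_star + rng.normal(0.0, sigma_eta, T)                # classical measurement error
    beta_hat = ols_slope(x, y)
    # Probability limit of the naive slope: beta / (1 + sigma_eta^2 / sigma_xstar^2)
    beta_limit = beta / (1.0 + sigma_eta**2 / sigma_xstar**2)
    return beta_hat, beta_limit


beta_hat, beta_limit = simulate_attenuation()
print(f"naive slope: {beta_hat:.3f}, predicted limit: {beta_limit:.3f}, true beta: 2.0")
```

With these values the predicted limit is 2/1.25 = 1.6, so the naive slope should land near 1.6 rather than near the true value 2.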

It can be argued that almost all existing data sets contain errors of different nature and magnitude, so that attenuation bias is extremely frequent (although in multivariate regression the direction of bias is ambiguous). Jerry Hausman sees this as an iron law of econometrics: “The magnitude of the estimate is usually smaller than expected.”[3]

Specification

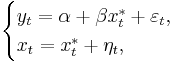

Usually measurement error models are described using the latent variables approach. If y is the response variable and x are observed values of the regressors, then we assume there exist some latent variables y* and x* which follow the model's “true” functional relationship g, and such that the observed quantities are their noisy observations:

$$\begin{cases} y^* = g(x^*, w\,|\,\theta), \\ y = y^* + \varepsilon, \\ x = x^* + \eta, \end{cases}$$

where θ is the model's parameter and w are those regressors which are assumed to be error-free (for example when linear regression contains an intercept, the regressor which corresponds to the constant certainly has no “measurement errors”). Depending on the specification these error-free regressors may or may not be treated separately; in the latter case it is simply assumed that corresponding entries in the variance matrix of η's are zero.

The variables y, x, w are all observed, meaning that the statistician possesses a data set of n statistical units $\{y_i, x_i, w_i\}_{i=1,\ldots,n}$ which follow the data generating process described above; the latent variables x*, y*, ε, and η are not observed, however.

This specification does not encompass all existing errors-in-variables (EiV) models. For example, in some of them the function g may be non-parametric or semi-parametric. Other approaches model the relationship between y* and x* as distributional instead of functional; that is, they assume that y* conditionally on x* follows a certain (usually parametric) distribution.

Terminology and assumptions

- The observed variable x may be called the manifest, indicator, or proxy variable.

- The unobserved variable x* may be called the latent or true variable. It may be regarded either as an unknown constant (in which case the model is called a functional model), or as a random variable (correspondingly a structural model).[4]

- The relationship between the measurement error η and the latent variable x* can be modeled in different ways:

  - Classical errors: η ⊥ x*, the errors are independent of the latent variable. This is the most common assumption; it implies that the errors are introduced by the measuring device and their magnitude does not depend on the value being measured.

  - Mean-independence: E[η | x*] = 0, the errors are mean-zero for every value of the latent regressor. This is a less restrictive assumption than the classical one, as it allows for heteroscedasticity or other effects in the measurement errors.

  - Berkson’s errors: η ⊥ x, the errors are independent of the observed regressor x. This assumption has very limited applicability. One example is round-off errors: if a person’s true age x* is a continuous random variable, whereas the observed age x is truncated to the next smallest integer, then the truncation error is approximately independent of the observed age. Another possibility is a fixed-design experiment: if a scientist decides to make a measurement at a certain predetermined moment of time x, say at x = 10 s, then the real measurement may occur at some other value of x* (for example due to her finite reaction time), and such measurement error will generally be independent of the “observed” value of the regressor.

  - Misclassification errors: a special case used for dummy regressors. If x* is an indicator of a certain event or condition (such as person is male/female, some medical treatment given/not, etc.), then the measurement error in such a regressor corresponds to incorrect classification, similar to type I and type II errors in statistical testing. In this case the error η = x − x* may take only 3 possible values (−1, 0, 1), and its distribution conditional on x* is modeled with two parameters: α = Pr[η=−1 | x*=1] and β = Pr[η=1 | x*=0]. The necessary condition for identification is that α+β<1, that is, misclassification should not happen “too often”. (This idea can be generalized to discrete variables with more than two possible values.)
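To see why the distinction between classical and Berkson errors matters, here is a small Python simulation (an illustrative sketch with arbitrary parameter values, NumPy assumed). In this linear model, classical errors attenuate the least-squares slope, while Berkson errors leave it consistent, because the error is then independent of the observed regressor and simply joins the equation's disturbance. That second property is specific to the linear case and does not carry over to non-linear models in general.

```python
import numpy as np


def ols_slope(x, y):
    """Least-squares slope of y on x (model with intercept)."""
    xc = x - x.mean()
    return (xc @ (y - y.mean())) / (xc @ xc)


rng = np.random.default_rng(1)
n, beta = 200_000, 1.5

# Classical error: x = x* + eta with eta independent of x*.
x_star = rng.normal(0.0, 1.0, n)
y = beta * x_star + rng.normal(0.0, 0.2, n)
x_classical = x_star + rng.normal(0.0, 1.0, n)
slope_classical = ols_slope(x_classical, y)   # close to beta / 2 here

# Berkson error: x* = x + u with u independent of the observed x.
x_obs = rng.normal(0.0, 1.0, n)
x_star_b = x_obs + rng.normal(0.0, 1.0, n)
y_b = beta * x_star_b + rng.normal(0.0, 0.2, n)
slope_berkson = ols_slope(x_obs, y_b)         # close to beta

print(slope_classical, slope_berkson)
```

Because Var(η) equals Var(x*) in the classical case, the reliability ratio is 1/2 and the slope settles near 0.75, while the Berkson slope stays near 1.5.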

Linear model

Linear errors-in-variables models were studied first, probably because linear models were so widely used and they are easier to analyze than non-linear ones. Unlike standard least squares regression (OLS), errors-in-variables (EiV) regression is not straightforward to extend from the simple to the multivariate case.

Simple linear model

The simple linear errors-in-variables model was already presented in the “motivation” section:

$$\begin{cases} y_t = \alpha + \beta x_t^* + \varepsilon_t, \\ x_t = x_t^* + \eta_t, \end{cases}$$

where all variables are scalar. Here α and β are the parameters of interest, whereas σε and ση (the standard deviations of the error terms) are the nuisance parameters. The “true” regressor x* is treated as a random variable (structural model), independent of the measurement error η (classical assumption).

This model is identifiable in two cases: (1) the latent regressor x* is not normally distributed, or (2) x* has a normal distribution, but neither $\varepsilon_t$ nor $\eta_t$ is divisible by a normal distribution.[5] That is, the parameters α, β can be consistently estimated from the data set $(x_t, y_t)_{t=1}^T$ without any additional information, provided the latent regressor is not Gaussian.

Before this identifiability result was established, statisticians attempted to apply the maximum likelihood technique by assuming that all variables are normal, and then concluded that the model is not identified. The suggested remedy was to assume that some of the parameters of the model are known or can be estimated from an outside source. Such estimation methods include:[6]

- Deming regression: assumes that the ratio δ = σ²ε/σ²η is known. This could be appropriate, for example, when errors in y and x are both caused by measurements, and the accuracy of the measuring devices or procedures is known. The case δ = 1 is also known as orthogonal regression.

- Regression with known reliability ratio λ = σ²∗/(σ²η + σ²∗), where σ²∗ is the variance of the latent regressor. This approach may be applicable, for example, when repeated measurements of the same unit are available, or when the reliability ratio is known from an independent study. In this case the consistent estimate of the slope is equal to the least-squares estimate divided by λ.

- Regression with known σ²η may occur when the source of the errors in x is known and their variance can be calculated. This could include rounding errors, or errors introduced by the measuring device. When σ²η is known, we can compute the reliability ratio as λ = (σ²x − σ²η)/σ²x and reduce the problem to the previous case.
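The three corrections listed above have simple closed forms. Below is a sketch in Python (NumPy assumed) on simulated data with σ²ε = σ²η = 0.25 and σ²∗ = 1, so that δ = 1 and λ = 0.8; the function names are hypothetical. For the Deming slope it uses the standard closed-form solution $\hat\beta = \big(s_{yy} - \delta s_{xx} + \sqrt{(s_{yy} - \delta s_{xx})^2 + 4\delta s_{xy}^2}\big)/(2 s_{xy})$, where the s terms are sample (co)variances. That formula is supplied here as an assumption and is not stated in the text.

```python
import numpy as np


def deming_slope(x, y, delta):
    """Deming slope with known delta = sigma_eps^2 / sigma_eta^2."""
    sxx = np.var(x)
    syy = np.var(y)
    sxy = np.mean((x - x.mean()) * (y - y.mean()))
    d = syy - delta * sxx
    return (d + np.sqrt(d**2 + 4.0 * delta * sxy**2)) / (2.0 * sxy)


def ols_slope(x, y):
    """Naive least-squares slope (attenuated under measurement error)."""
    xc = x - x.mean()
    return (xc @ (y - y.mean())) / (xc @ xc)


def reliability_slope(x, y, lam):
    """Known reliability ratio: divide the least-squares slope by lambda."""
    return ols_slope(x, y) / lam


def reliability_from_sigma_eta2(x, sigma_eta2):
    """Known error variance: lambda = (var(x) - sigma_eta^2) / var(x)."""
    var_x = np.var(x)
    return (var_x - sigma_eta2) / var_x


rng = np.random.default_rng(2)
T, alpha, beta = 200_000, 1.0, 2.0
x_star = rng.normal(0.0, 1.0, T)                        # sigma_*^2 = 1
y = alpha + beta * x_star + rng.normal(0.0, 0.5, T)     # sigma_eps^2 = 0.25
x = x_star + rng.normal(0.0, 0.5, T)                    # sigma_eta^2 = 0.25

b_naive = ols_slope(x, y)                               # attenuated, near 1.6
b_deming = deming_slope(x, y, delta=1.0)                # orthogonal regression case
b_known_lambda = reliability_slope(x, y, lam=0.8)
lam_hat = reliability_from_sigma_eta2(x, sigma_eta2=0.25)
b_known_sigma = reliability_slope(x, y, lam_hat)
print(b_naive, b_deming, b_known_lambda, b_known_sigma)
```

All three corrected slopes should be close to the true value 2, while the naive slope stays near 1.6.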

Newer estimation methods that do not assume knowledge of some of the parameters of the model, include:

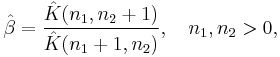

- Method of moments — the GMM estimator based on the third- (or higher-) order joint cumulants of observable variables. The slope coefficient can be estimated from [7]

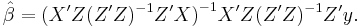

- Instrumental variables — a regression which requires that certain additional data variables z, called instruments, were available. These variables should be uncorrelated with the errors in the equation for the dependent variable, and they should also be correlated (relevant) with the true regressors x*. If such variables can be found then the estimator takes form
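Both methods can be tried on simulated data. The sketch below (Python with NumPy; all parameter values and names are illustrative) uses the lowest-order version of the cumulant approach. At third order, joint cumulants equal central moments, so the estimator reduces to $\hat\beta = \hat{\operatorname{E}}[\tilde x \tilde y^2]/\hat{\operatorname{E}}[\tilde x^2 \tilde y]$, with tildes denoting de-meaned variables. This requires a skewed latent regressor, so x* is drawn from an exponential distribution. The instrumental-variables part uses a hypothetical instrument z that is correlated with x* but independent of ε and η.

```python
import numpy as np


def third_moment_slope(x, y):
    """Third-order cumulant estimator: E[xc * yc^2] / E[xc^2 * yc]."""
    xc = x - x.mean()
    yc = y - y.mean()
    return np.mean(xc * yc**2) / np.mean(xc**2 * yc)


def iv_slope(z, x, y):
    """Simple IV estimator: sample Cov(z, y) / sample Cov(z, x)."""
    zc = z - z.mean()
    return (zc @ (y - y.mean())) / (zc @ (x - x.mean()))


rng = np.random.default_rng(3)
T, alpha, beta = 1_000_000, 1.0, 2.0

# Skewed (non-Gaussian) latent regressor: exponential, third cumulant 2.
x_star = rng.exponential(1.0, T)
y = alpha + beta * x_star + rng.normal(0.0, 0.3, T)
x = x_star + rng.normal(0.0, 0.5, T)
b_cumulant = third_moment_slope(x, y)

# Instrument z drives x* but is independent of the errors eps and eta.
z = rng.normal(0.0, 1.0, T)
x_star_iv = z + rng.normal(0.0, 1.0, T)
y_iv = alpha + beta * x_star_iv + rng.normal(0.0, 0.3, T)
x_iv = x_star_iv + rng.normal(0.0, 0.5, T)
b_iv = iv_slope(z, x_iv, y_iv)
print(b_cumulant, b_iv)
```

Third-moment estimates are noticeably noisier than the IV estimate, which is why a large sample is used here. With a symmetric x*, the denominator would be close to zero and the cumulant estimator would break down.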

Multivariate linear model

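The multivariate model looks exactly like the simple linear model,

$$\begin{cases} y_t = \alpha + \beta' x_t^* + \varepsilon_t, \\ x_t = x_t^* + \eta_t, \end{cases}$$

only this time β, $\eta_t$, $x_t$ and $x_t^*$ are k×1 vectors.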

The general identifiability condition for this model remains an open question. It is known however that in the case when (ε,η) are independent and jointly normal, the parameter β is identified if and only if it is impossible to find a non-singular k×k block matrix [a A] (where a is a k×1 vector) such that a′x* is distributed normally and independently from A′x*.[8]

Some of the estimation methods for multivariate linear models are:

- Total least squares is an extension of Deming regression to the multivariate setting. When all the k+1 components of the vector (ε,η) have equal variances and are independent, this is equivalent to running the orthogonal regression of y on the vector x — that is, the regression which minimizes the sum of squared distances between points (yt,xt) and the k-dimensional hyperplane of “best fit”.

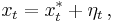

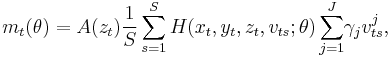

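- The method of moments estimator[9] can be constructed based on the moment conditions $\operatorname{E}[z_t(y_t - \alpha - \beta' x_t)] = 0$, where the (5k+3)-dimensional vector of instruments $z_t$ is defined as

$$\begin{aligned}
& z_t = \left( 1\ z_{t1}'\ z_{t2}'\ z_{t3}'\ z_{t4}'\ z_{t5}'\ z_{t6}'\ z_{t7}' \right)', \quad \text{where} \\
& z_{t1} = x_t \ast x_t \\
& z_{t2} = x_t y_t \\
& z_{t3} = y_t^2 \\
& z_{t4} = x_t \ast x_t \ast x_t - 3\big(\operatorname{E}[x_tx_t']\ast I_k\big)x_t \\
& z_{t5} = x_t \ast x_t y_t - 2\big(\operatorname{E}[y_tx_t']\ast I_k\big)x_t - y_t\big(\operatorname{E}[x_tx_t']\ast I_k\big)\iota_k \\
& z_{t6} = x_t y_t^2 - \operatorname{E}[y_t^2]x_t - 2y_t\operatorname{E}[x_ty_t] \\
& z_{t7} = y_t^3 - 3y_t\operatorname{E}[y_t^2]
\end{aligned}$$

where ∗ denotes the Hadamard (elementwise) product of matrices, $\iota_k$ is a k×1 vector of ones, and the variables $x_t$, $y_t$ have been de-meaned beforehand. This method can be extended to use moments higher than the third order, if necessary, and to accommodate variables measured without error.[11]

- The instrumental variables approach requires finding additional data variables $z_t$ that would serve as instruments for the mismeasured regressors $x_t$. This method is the simplest from the implementation point of view; however, its disadvantage is that it requires collecting additional data, which may be costly or even impossible. When the instruments can be found, the estimator takes the standard form

$$\hat\beta = \big(X'Z(Z'Z)^{-1}Z'X\big)^{-1}X'Z(Z'Z)^{-1}Z'y,$$

where X, Z and y stack the observations of $x_t$, $z_t$ and $y_t$ by rows.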
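Total least squares can be computed compactly through the singular value decomposition. After de-meaning, the right singular vector of the stacked data matrix [X, y] with the smallest singular value is normal to the best-fit hyperplane. The Python sketch below (NumPy assumed; parameter values and the function name are illustrative) applies this to simulated data with k = 2 in which all error components share the same variance, the setting in which total least squares coincides with orthogonal regression. It also compares the result with the attenuated least-squares fit.

```python
import numpy as np


def total_least_squares(X, y):
    """TLS slope vector via SVD of the de-meaned data matrix [X, y]."""
    Z = np.column_stack([X - X.mean(axis=0), y - y.mean()])
    _, _, Vt = np.linalg.svd(Z, full_matrices=False)
    v = Vt[-1]                     # direction with the smallest singular value
    k = X.shape[1]
    # The fitted hyperplane is v[:k]' x + v[k] y = 0, so beta = -v[:k] / v[k].
    return -v[:k] / v[k]


rng = np.random.default_rng(4)
T, k, sigma = 200_000, 2, 0.5
beta = np.array([1.0, -2.0])
x_star = rng.normal(0.0, 1.0, (T, k))
y = 0.5 + x_star @ beta + rng.normal(0.0, sigma, T)   # error in y
X = x_star + rng.normal(0.0, sigma, (T, k))           # errors in x, same variance

beta_tls = total_least_squares(X, y)
Xc = X - X.mean(axis=0)
beta_ols = np.linalg.lstsq(Xc, y - y.mean(), rcond=None)[0]   # attenuated
print(beta_tls, beta_ols)
```

Here Var(x) = 1.25·I, so least squares shrinks each coefficient by the factor 1/1.25, while the TLS estimate stays close to (1, −2). If the error variances were unequal, the data would first have to be rescaled for this equivalence to hold.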

Non-linear models

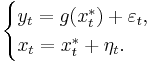

A generic non-linear measurement error model takes the form

$$\begin{cases} y_t = g(x_t^*) + \varepsilon_t, \\ x_t = x_t^* + \eta_t. \end{cases}$$

Here the function g can be either parametric or non-parametric. When g is parametric, it will be written as g(x*, β).

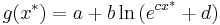

For a general vector-valued regressor x*, the conditions for model identifiability are not known. However, in the case of scalar x*, the model is identified unless the function g is of the “log-exponential” form[12]

$$g(x^*) = a + b \ln\big(e^{cx^*} + d\big)$$

and the latent regressor x* has density

where constants A,B,C,D,E,F may depend on a,b,c,d.

Despite this optimistic result, no methods currently exist for estimating non-linear errors-in-variables models without any extraneous information. However, there are several techniques that make use of some additional data: either instrumental variables or repeated observations.

Instrumental variables methods

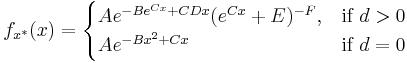

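- Newey’s simulated moments method[13] for parametric models: requires that there is an additional set of observed predictor variables $z_t$, such that the true regressor can be expressed as

$$x_t^* = \pi_0' z_t + \sigma_0 \zeta_t,$$

where $\pi_0$ and $\sigma_0$ are unknown constant matrices and $\zeta_t \perp z_t$.
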
Repeated observations

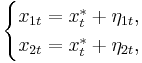

In this approach two (or maybe more) repeated observations of the regressor x* are available. Both observations contain their own measurement errors; however, those errors are required to be independent:

$$\begin{cases} x_{1t} = x_t^* + \eta_{1t}, \\ x_{2t} = x_t^* + \eta_{2t}, \end{cases}$$

where x* ⊥ η1 ⊥ η2. The variables η1, η2 need not be identically distributed (although if they are, the efficiency of the estimator can be slightly improved). With only these two observations it is possible to consistently estimate the density function of x* using Kotlarski’s deconvolution technique.[14]
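Kotlarski’s technique rests on the identity $\varphi_{x^*}(t) = \exp\int_0^t \frac{\operatorname{E}[i x_{2} e^{isx_{1}}]}{\operatorname{E}[e^{isx_{1}}]}\,ds$. It holds when x* ⊥ η1 ⊥ η2, the characteristic function of $x_1$ does not vanish on [0, t], and, as an additional assumption here, E[η2] = 0. The Python sketch below (NumPy assumed; the grid, sample size and distributions are illustrative) replaces the expectations with sample averages and integrates with the trapezoid rule. This recovers the characteristic function of x* from the two noisy measurements. Inverting that characteristic function to obtain the density itself is not shown.

```python
import numpy as np


def kotlarski_cf(x1, x2, t_grid):
    """Estimate the characteristic function of x* on t_grid (which must start at 0)."""
    e = np.exp(1j * np.outer(t_grid, x1))        # e^{i t x1}, shape (len(t), n)
    num = (1j * x2 * e).mean(axis=1)              # sample E[i x2 e^{i t x1}]
    den = e.mean(axis=1)                          # sample E[e^{i t x1}]
    ratio = num / den                             # derivative of log phi_{x*}
    steps = 0.5 * (ratio[1:] + ratio[:-1]) * np.diff(t_grid)   # trapezoid rule
    log_phi = np.concatenate(([0.0], np.cumsum(steps)))
    return np.exp(log_phi)


rng = np.random.default_rng(5)
n = 20_000
x_star = rng.normal(0.0, 1.0, n)                  # latent regressor, N(0, 1)
x1 = x_star + rng.uniform(-0.5, 0.5, n)           # first measurement
x2 = x_star + rng.normal(0.0, 0.5, n)             # second measurement, mean-zero error
t_grid = np.linspace(0.0, 1.5, 31)
phi_hat = kotlarski_cf(x1, x2, t_grid)
phi_true = np.exp(-t_grid**2 / 2)                 # characteristic function of N(0, 1)
print(np.max(np.abs(phi_hat - phi_true)))
```

The two errors are deliberately drawn from different distributions, since the method does not require them to be identically distributed.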

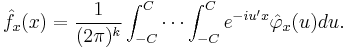

- Li’s conditional density method[15] for parametric models. The regression equation can be written in terms of the observable variables as

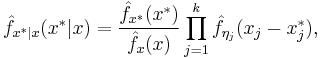

Assuming for simplicity that η1, η2 are identically distributed, this conditional density can be computed as

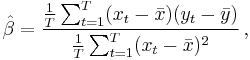

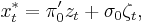

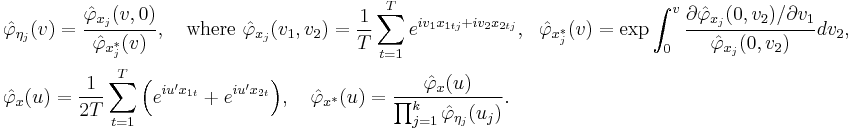

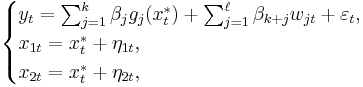

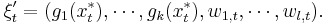

All densities in this formula can be estimated using inversion of the empirical characteristic functions. In particular, - Schennach’s estimator[16] for a parametric linear-in-parameters nonlinear-in-variables model. This is a model of the form

If not for the measurement errors, this would have been a standard linear model with the estimator ,

,

is consistent and asymptotically normal.

is consistent and asymptotically normal. - Schennach’s estimator [17] for a nonparametric model. The standard Nadaraya–Watson estimator for a nonparametric model takes form
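For reference, the infeasible Nadaraya–Watson estimator is easy to compute when x* is observed. The Python sketch below (NumPy assumed; the Gaussian kernel, bandwidth and regression function are illustrative choices) evaluates it once on the latent x* and once, naively, on the noisy x. Plugging in the mismeasured regressor visibly flattens the estimated curve. This sketch does not implement Schennach’s deconvolution step; it only shows the problem that step is meant to fix.

```python
import numpy as np


def nadaraya_watson(x_eval, x_data, y_data, h):
    """Kernel regression estimate g_hat(x) with a Gaussian kernel K_h."""
    u = (x_data[None, :] - np.atleast_1d(x_eval)[:, None]) / h
    w = np.exp(-0.5 * u**2)            # kernel weights; normalising constants cancel
    return (w @ y_data) / w.sum(axis=1)


rng = np.random.default_rng(6)
n, h = 5_000, 0.2
x_star = rng.uniform(-3.0, 3.0, n)
y = np.sin(x_star) + rng.normal(0.0, 0.1, n)          # g(x*) = sin(x*)
x = x_star + rng.normal(0.0, 0.5, n)                   # mismeasured regressor

g_infeasible = nadaraya_watson(1.0, x_star, y, h)[0]   # uses the unobservable x*
g_naive = nadaraya_watson(1.0, x, y, h)[0]             # uses the noisy x
print(g_infeasible, g_naive, np.sin(1.0))
```

At x = 1 the infeasible estimate should be close to sin(1) ≈ 0.84. The naive estimate is pulled toward roughly 0.73, because it estimates E[sin(x*) | x] rather than sin(x).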

Further reading

- Chen, Hong & Nekipelov 2009

- Söderström 2007

- An Historical Overview of Linear Regression with Errors in both Variables, J.W. Gillard 2006

Notes

- ^ Griliches & Ringstad 1970, Chesher 1991

- ^ Greene 2003, Chapter 5.6.1

- ^ Hausman 2001, p. 58

- ^ Fuller 1987, p. 2

- ^ Reiersøl 1950, p. 383. A somewhat more restrictive result was established earlier by R. C. Geary in “Inherent relations between random variables”, Proceedings of Royal Irish Academy, vol.47 (1950). He showed that under the additional assumption that (ε, η) are jointly normal, the model is not identified if and only if x*’s are normal.

- ^ Fuller 1987, ch. 1

- ^ Pal 1980, §6

- ^ Bekker 1986. An earlier proof by Y. Willassen in “Extension of some results by Reiersøl to multivariate models”, Scand. J. Statistics, 6(2) (1979) contained errors.

- ^ Dagenais & Dagenais 1997. An earlier paper (Pal 1980) considered a simpler case in which all components of the vector (ε, η) are independent and symmetrically distributed.

- ^ Fuller 1987, p. 184

- ^ Erickson & Whited 2002

- ^ Schennach, Hu & Lewbel 2007

- ^ Newey 2001

- ^ Li & Vuong 1998

- ^ Li 2002

- ^ Schennach 2004a

- ^ Schennach 2004b

References

- Bekker, Paul A. (1986), "Comment on identification in the linear errors in variables model", Econometrica 54 (1): 215–217, doi:10.2307/1914166, JSTOR 1914166

- Chen, Xiaohong; Hong, Han; Nekipelov, Denis (2009), "Nonlinear models of measurement errors", Working paper, http://www.stanford.edu/~doubleh/papers/survey-round2.pdf

- Chesher, Andrew (1991), "The effect of measurement error", Biometrika 78 (3): 451–462, doi:10.1093/biomet/78.3.451, JSTOR 2337015

- Dagenais, Marcel G.; Dagenais, Denyse L. (1997), "Higher moment estimators for linear regression models with errors in the variables", Journal of Econometrics 76: 193–221, doi:10.1016/0304-4076(95)01789-5

- Erickson, Timothy; Whited, Toni M. (2002), "Two-step GMM estimation of the errors-in-variables model using high-order moments", Econometric Theory 18 (3): 776–799, JSTOR 3533649

- Fuller, Wayne A. (1987), Measurement error models, John Wiley & Sons, Inc, ISBN 0-471-86187-1

- Greene, William H. (2003), Econometric analysis (5th ed.), New Jersey: Prentice Hall, ISBN 0130661899, LCCN 2002 HB139.G74 2002

- Griliches, Zvi; Hausman, Jerry A. (1986), "Errors in variables in panel data", Journal of Econometrics 31 (1): 93–118, doi:10.1016/0304-4076(86)90058-8

- Griliches, Zvi; Ringstad, Vidar (1970), "Errors-in-the-variables bias in nonlinear contexts", Econometrica 38 (2): 368–370, doi:10.2307/1913020, JSTOR 1913020

- Hausman, Jerry A. (2001), "Mismeasured variables in econometric analysis: problems from the right and problems from the left", The Journal of Economic Perspectives 15 (4): 57–67, doi:10.1257/jep.15.4.57, JSTOR 2696516

- Hong, Han; Tamer, Elie (2003), "A simple estimator for nonlinear error in variable models", Journal of Econometrics 117 (1): 1–19, doi:10.1016/S0304-4076(03)00116-7

- Jung, Kang-Mo (2007), "Least Trimmed Squares Estimator in the Errors-in-Variables Model", Journal of Applied Statistics 34 (3): 331–338, doi:10.1080/02664760601004973

- Kummell, C. H. (1879), "Reduction of observation equations which contain more than one observed quantity", The Analyst 6 (4): 97–105, doi:10.2307/2635646, JSTOR 2635646

- Li, Tong (2002), "Robust and consistent estimation of nonlinear errors-in-variables models", Journal of Econometrics 110 (1): 1–26, doi:10.1016/S0304-4076(02)00120-3

- Li, Tong; Vuong, Quang (1998), "Nonparametric estimation of the measurement error model using multiple indicators", Journal of Multivariate Analysis 65 (2): 139–165, doi:10.1006/jmva.1998.1741

- Newey, Whitney K. (2001), "Flexible simulated moment estimation of nonlinear errors-in-variables model", The Review of Economics and Statistics 83 (4): 616–627, doi:10.1162/003465301753237704, JSTOR 3211757

- Pal, Manoranjan (1980), "Consistent moment estimators of regression coefficients in the presence of errors in variables", Journal of Econometrics 14 (3): 349–364, doi:10.1016/0304-4076(80)90032-9

- Reiersøl, Olav (1950), "Identifiability of a linear relation between variables which are subject to error", Econometrica 18 (4): 375–389, doi:10.2307/1907835, JSTOR 1907835

- Schennach, Susanne M. (2004a), "Estimation of nonlinear models with measurement error", Econometrica 72 (1): 33–75, doi:10.1111/j.1468-0262.2004.00477.x, JSTOR 3598849.

- Schennach, Susanne M. (2004b), "Nonparametric regression in the presence of measurement error", Econometric Theory 20 (6): 1046–1093, doi:10.1017/S0266466604206028.

- Schennach, Susanne; Hu, Yingyao; Lewbel, Arthur (2007), "Nonparametric identification of the classical errors-in-variables model without side information", Working paper, http://escholarship.bc.edu/cgi/viewcontent.cgi?article=1433&context=econ_papers

- Söderström, Torsten (2007), "Errors-in-variables methods in system identification", Automatica 43 (6): 939–958, doi:10.1016/j.automatica.2006.11.025
